What Is robots.txt and Why Is It Important?

In the realm of Search Engine Optimization (SEO) and website management, robots.txt plays a pivotal role. This small yet powerful file helps control and manage how search engines and other automated agents interact with your website. In this blog, we will delve into what robots.txt is, why it’s important, how it works, and best practices for using it effectively.

Let’s get started!

What is robots.txt?

robots.txt is a text file located in the root directory of a website. Its primary purpose is to tell web crawlers (also known as robots or spiders) which pages or sections of the website they should not crawl. The file acts as a guideline for search engines, helping to manage and optimize the crawling process.

A bot is an automated computer program that interacts with websites and applications. There are good bots and bad bots, and one type of good bot is called a web crawler bot. These bots “crawl” webpages and index the content so that it can show up in search engine results. A robots.txt file helps manage the activities of these web crawlers so that they don’t overtax the web server hosting the website, or index pages that aren’t meant for public view.

How Does robots.txt Work?

A robots.txt file is a plain text file that contains no HTML markup. Like other files on the website, it is stored on the web server. You can usually view the robots.txt file for any given website by typing the homepage’s full URL followed by /robots.txt, such as https://templatelane.com/robots.txt. Users are unlikely to come across the file because it isn’t linked from anywhere else on the website, but the majority of web crawler bots will look for it before exploring the rest of the site.
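As a small illustration of where the file lives, the sketch below derives the robots.txt address from any page URL on the same site. The helper name `robots_txt_url` and the sample page path are made up for this example.

```python
from urllib.parse import urlsplit, urlunsplit


def robots_txt_url(page_url: str) -> str:
    """Return the robots.txt URL for the site that hosts page_url."""
    parts = urlsplit(page_url)
    # Keep only the scheme and host; robots.txt sits at the root path.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


print(robots_txt_url("https://templatelane.com/blog/some-post/?ref=home"))
```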

Although it contains instructions for bots, a robots.txt file is unable to enforce its commands. Before viewing any other pages on a domain, a good bot—like a web crawler or news feed bot—will try to visit the robots.txt file and will obey its instructions. A malicious bot will either process the robots.txt file to locate the prohibited webpages or ignore it.
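To make the "good bot" behavior concrete, here is a minimal sketch using Python's standard `urllib.robotparser` module. The rules, the URLs, and the bot name `ExampleBot` are hypothetical, and the file content is supplied inline so the example runs without network access.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, supplied inline so the sketch runs offline.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
"""


def crawlable(urls, robots_txt, user_agent):
    """Return only the URLs that robots.txt lets user_agent fetch."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if parser.can_fetch(user_agent, url)]


queue = [
    "https://example.com/",
    "https://example.com/admin/login",
    "https://example.com/blog/first-post",
]
allowed = crawlable(queue, ROBOTS_TXT, "ExampleBot")
print(allowed)
```

A real crawler would load the live file instead, for example with the parser's `set_url()` and `read()` methods. Nothing forces a bot to run a check like this, which is why the file cannot enforce its own rules.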

A web crawler bot will follow the most specific set of instructions in the robots.txt file. If there are contradictory commands in the file, the bot will follow the more granular command. The robots.txt file uses a straightforward syntax to provide instructions to web crawlers.
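One way to picture "the more granular command wins" is the longest-match rule described in RFC 9309, where the matching rule with the longest path takes precedence. The sketch below is a simplified illustration of that idea: it ignores wildcards, and the function name and sample paths are assumptions made for this example. Some parsers apply rules in file order instead.

```python
def is_allowed(path, rules):
    """Decide whether path may be crawled under longest-match precedence.

    rules is a list of (directive, prefix) pairs such as ("disallow", "/shop/").
    """
    best_len = -1
    allowed = True  # With no matching rule, crawling is permitted.
    for directive, prefix in rules:
        if not prefix or not path.startswith(prefix):
            continue
        # A longer prefix is more specific; on a tie, prefer "allow".
        if len(prefix) > best_len or (len(prefix) == best_len and directive == "allow"):
            best_len = len(prefix)
            allowed = directive == "allow"
    return allowed


rules = [("disallow", "/shop/"), ("allow", "/shop/catalog/")]
print(is_allowed("/shop/cart", rules))
print(is_allowed("/shop/catalog/shoes", rules))
print(is_allowed("/about", rules))
```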

Here’s an example of a basic robots.txt file:

    User-agent: *
    Disallow: /admin/
    Disallow: /private/
    Allow: /public/

- User-agent: Specifies which web crawler the rules apply to. An asterisk (*) means the rules apply to all web crawlers.

- Disallow: Indicates the directories or pages that should not be crawled.

- Allow: Specifies the directories or pages that can be crawled, even if they fall under a disallowed directory.
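To show how these three directives fit together, the following rough sketch groups the rules of a robots.txt file by user agent. It is a simplified reader written for this article, not a complete parser, and the name `parse_groups` is made up.

```python
def parse_groups(robots_txt):
    """Split robots.txt text into {user_agent: [(directive, value), ...]}."""
    groups = {}
    current_agents = []
    reading_agents = False
    for raw_line in robots_txt.splitlines():
        line = raw_line.split("#", 1)[0].strip()  # Drop comments and padding.
        if ":" not in line:
            continue
        field, value = (part.strip() for part in line.split(":", 1))
        field = field.lower()
        if field == "user-agent":
            if not reading_agents:
                current_agents = []  # A new group starts here.
            current_agents.append(value)
            groups.setdefault(value, [])
            reading_agents = True
        elif field in ("allow", "disallow"):
            reading_agents = False
            for agent in current_agents:
                groups[agent].append((field, value))
    return groups


sample = """\
User-agent: *
Disallow: /admin/
Disallow: /private/
Allow: /public/
"""
print(parse_groups(sample))
```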

What does ‘User-agent: *’ mean?

Any person or program using the internet has a “user agent,” which is like a name assigned to them. For people, this includes details like the browser they’re using and their operating system, but no personal information. This helps websites display content that works well with the user’s system. For bots, the user agent helps website administrators identify which bots are visiting the site.
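For a program, the user agent is simply a request header. The sketch below builds a request with Python's standard library without sending it. The bot name `ExampleBot/1.0` and both URLs are hypothetical.

```python
from urllib.request import Request

# "ExampleBot/1.0" and the URLs are hypothetical values for illustration.
request = Request(
    "https://example.com/",
    headers={"User-Agent": "ExampleBot/1.0 (+https://example.com/bot-info)"},
)

# urllib stores header names in capitalized form, hence "User-agent".
print(request.get_header("User-agent"))
```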

In a robots.txt file, website administrators can give specific instructions to different bots by writing separate rules for each bot’s user agent. For example, if an admin wants a page to appear in Google search results but not in Bing, they can include two sets of instructions in the robots.txt file: one starting with “User-agent: Bingbot” and another with “User-agent: Googlebot”.

Common search engine bot user agent names include:

- Google:
  - Googlebot
  - Googlebot-Image (for images)
  - Googlebot-News (for news)
  - Googlebot-Video (for video)

- Bing:
  - Bingbot
  - MSNBot-Media (for images and video)

- Baidu:
  - Baiduspider
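Here is a minimal sketch of separate rules per bot, checked with Python's standard `urllib.robotparser`. The page name `/google-only.html` and the domain are hypothetical. An empty `Disallow:` line means nothing is blocked for that bot.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: Bingbot is kept away from one page, Googlebot is not.
ROBOTS_TXT = """\
User-agent: Bingbot
Disallow: /google-only.html

User-agent: Googlebot
Disallow:
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

page = "https://example.com/google-only.html"
googlebot_ok = parser.can_fetch("Googlebot", page)
bingbot_ok = parser.can_fetch("Bingbot", page)
print("Googlebot:", googlebot_ok)
print("Bingbot:", bingbot_ok)
```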

How do ‘Disallow’ commands work?

The “Disallow” command is a key part of the robots exclusion protocol. It tells bots not to visit the webpage or set of webpages specified after the command. These pages aren’t hidden; compliant crawlers simply skip them, usually because the pages aren’t useful for most Google or Bing users. Anyone who knows the link can still visit these pages on the website.

How do ‘Allow’ commands work?

The “Allow” command specifies which parts of a website can be accessed by web crawlers despite any broader restrictions set by the Disallow command. It grants permission for specific URLs or directories within a section that might otherwise be restricted. This command is particularly useful for giving access to certain content within a generally restricted area, ensuring that important pages are indexed while keeping other sections private. For example, you might disallow all content in a directory but allow a specific file within that directory to be crawled.
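The example in the last sentence might look like the sketch below, again using Python's standard `urllib.robotparser`. The directory and file names are hypothetical. The Allow line comes first because this particular parser applies the first matching rule in file order.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical paths. Allow is listed first because Python's parser applies
# the first matching rule in file order.
ROBOTS_TXT = """\
User-agent: *
Allow: /downloads/brochure.pdf
Disallow: /downloads/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

results = {}
for path in ("/downloads/brochure.pdf", "/downloads/internal-notes.pdf"):
    results[path] = parser.can_fetch("ExampleBot", "https://example.com" + path)
    print(path, results[path])
```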

Why Is the robots.txt File Important?

- Control Over Crawling: With robots.txt, you can specify which parts of your website search engine crawlers should stay out of. This can be useful for staging areas, duplicate content, or sections you would rather not surface in search.

- Optimize Crawl Budget: Search engines allocate a certain amount of resources (crawl budget) to each website. By directing crawlers to the most important pages and away from low-priority or resource-heavy sections, you can ensure that search engines use their resources on your site efficiently.

- Prevent Duplicate Content: If your website has multiple versions of the same content, robots.txt can help prevent duplicate content issues by blocking crawlers from accessing redundant pages.

- Enhance Site Security: Disallowing sensitive directories keeps well-behaved crawlers out of them, which adds a small extra layer of protection. Because the file is public and malicious bots can ignore it, it is not a substitute for real access controls.

Best Practices for Using robots.txt

- Keep It Simple: Avoid overly complex rules. Simple, clear instructions are more effective and easier to manage.

- Regularly Update: As your website evolves, update your robots.txt file to reflect any new pages or directories you want to control.

- Test Your File: Use a tool such as the robots.txt report in Google Search Console to ensure your file is correctly formatted and functioning as intended.

- Monitor Crawling: Keep an eye on your server logs or use tools like Google Search Console to monitor how search engines are interacting with your site.

- Don’t Rely Solely on robots.txt for Security: While robots.txt can keep compliant crawlers away from sensitive areas, it should not be your only security measure. Use proper authentication and authorization mechanisms for sensitive data.

- Allow Important Pages: Make sure critical pages are not accidentally disallowed. Blocking important pages can negatively impact your SEO.
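A quick self-check along these lines can be scripted. The sketch below uses Python's standard `urllib.robotparser` to list any must-have pages that a draft file would block. The rules and URLs are hypothetical, and the result reflects how this one parser reads the file rather than how every search engine does.

```python
from urllib.robotparser import RobotFileParser


def blocked_urls(robots_txt, important_urls, user_agent="Googlebot"):
    """Return the important URLs that robots_txt would stop user_agent from crawling."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in important_urls if not parser.can_fetch(user_agent, url)]


# Hypothetical file with an overly broad rule: "/p" also matches "/pricing".
ROBOTS_TXT = """\
User-agent: *
Disallow: /p
"""

MUST_BE_CRAWLABLE = [
    "https://example.com/",
    "https://example.com/pricing",
    "https://example.com/contact",
]

problems = blocked_urls(ROBOTS_TXT, MUST_BE_CRAWLABLE)
print(problems)
```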

Common Mistakes to Avoid

- Disallowing All Crawlers: Avoid using Disallow: / for all user-agents unless you want to block your entire site from being crawled.

- Misplaced robots.txt File: Ensure the robots.txt file is in the root directory. Placing it elsewhere will render it ineffective.

- Forgetting Important Directories: Regularly review your robots.txt to ensure all important directories are included or excluded as per your requirements.
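The first mistake is easy to catch automatically. The rough sketch below flags a file in which the group for all user agents contains `Disallow: /`. The function name is made up, and the check deliberately ignores any Allow rules that might partly offset the block.

```python
def blocks_entire_site(robots_txt):
    """Return True if a 'User-agent: *' group contains 'Disallow: /'."""
    agents = []
    reading_agents = False
    for raw_line in robots_txt.splitlines():
        line = raw_line.split("#", 1)[0].strip()  # Drop comments and padding.
        if ":" not in line:
            continue
        field, value = (part.strip() for part in line.split(":", 1))
        field = field.lower()
        if field == "user-agent":
            if not reading_agents:
                agents = []  # A new group starts here.
            agents.append(value)
            reading_agents = True
        else:
            reading_agents = False
            if field == "disallow" and value == "/" and "*" in agents:
                return True
    return False


print(blocks_entire_site("User-agent: *\nDisallow: /\n"))
print(blocks_entire_site("User-agent: *\nDisallow: /admin/\n"))
```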

How to Create a robots.txt File?

Creating a robots.txt file is simple. Here are the steps:

- Open a Text Editor: Use a basic text editor like Notepad (Windows) or TextEdit (Mac) to create the file.

- Write the Rules: Define the user-agents and the directories or pages you want to disallow or allow.

- Save the File: Save the file as

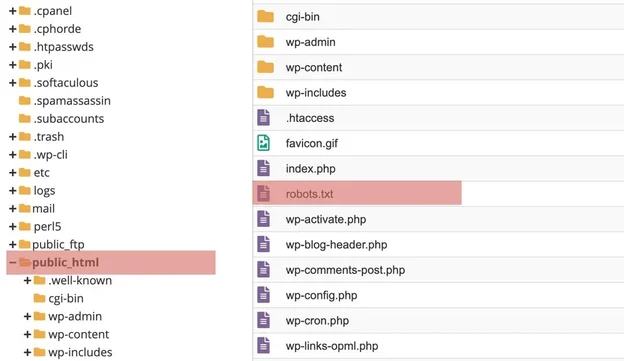

robots.txt. - Upload to Root Directory: Place the

robots.txtfile in the root directory of your website. For example, if your website iswww.example.com, therobots.txtfile should be accessible atwww.example.com/robots.txt.

Here’s an example of a basic robots.txt file:

    User-agent: *
    Disallow: /admin/
    Disallow: /private/
    Allow: /public/
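If you would rather generate the file than type it by hand, a short script can do it. The sketch below renders the same rules and saves them as robots.txt. The `RULES` structure and the helper name are assumptions made for this example, and a temporary folder stands in for your project folder.

```python
import tempfile
from pathlib import Path

# The same rules as the example above, keyed by user agent.
RULES = {
    "*": [("Disallow", "/admin/"), ("Disallow", "/private/"), ("Allow", "/public/")],
}


def build_robots_txt(rules):
    """Render {user_agent: [(directive, path), ...]} as robots.txt text."""
    lines = []
    for user_agent, directives in rules.items():
        lines.append(f"User-agent: {user_agent}")
        lines.extend(f"{directive}: {path}" for directive, path in directives)
        lines.append("")  # A blank line separates groups.
    return "\n".join(lines).rstrip("\n") + "\n"


content = build_robots_txt(RULES)
output_dir = Path(tempfile.mkdtemp())  # Stand-in for your project folder.
robots_path = output_dir / "robots.txt"  # The name must be exactly robots.txt.
robots_path.write_text(content, encoding="utf-8")
print(content)
```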

How to Upload robots.txt to Your Website’s Root Directory?

- Using FTP:

- Using cPanel:
  - Log in to your cPanel account.
  - Go to the File Manager.
  - Navigate to the root directory of your website.
  - Click on Upload and select the robots.txt file from your local computer.

- Using a CMS (e.g., WordPress):
  - Log in to your WordPress admin dashboard.
  - Go to the plugin section and install a plugin like “RankMath”, “Yoast SEO”, or “All in One SEO Pack”, which allows you to manage robots.txt.
  - Use the plugin’s interface to create and upload the robots.txt file.

- Verify the Upload:
  - Open a web browser and navigate to https://yourwebsite.com/robots.txt.
  - Ensure the file is accessible and the rules are correctly displayed.
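For the FTP route, the upload could also be scripted. The sketch below uses Python's standard `ftplib`. The host `ftp.example.com`, the credentials, and the `/public_html` web root are all hypothetical placeholders, and the right values depend on your hosting provider.

```python
from ftplib import FTP
from pathlib import Path


def upload_robots_txt(ftp, local_path, remote_dir):
    """Upload local_path as robots.txt into remote_dir over an open FTP session."""
    ftp.cwd(remote_dir)
    with Path(local_path).open("rb") as handle:
        # STOR writes the file under the given name on the server.
        ftp.storbinary("STOR robots.txt", handle)


def main():
    # Hypothetical host, credentials, and web root; replace with your own.
    with FTP("ftp.example.com") as ftp:
        ftp.login(user="username", passwd="password")
        upload_robots_txt(ftp, "robots.txt", "/public_html")


# main() is not called here because it needs a real server.
```

Plain FTP sends credentials unencrypted, so if your host supports it, `ftplib.FTP_TLS` or SFTP is the safer choice.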

When a WordPress Website Has No robots.txt File

If your website runs on WordPress, you may not find a robots.txt file in your root directory. That is because WordPress generates a virtual robots.txt file. In this case, you will need to create a new robots.txt file using a plain text editor.

Conclusion

The robots.txt file is a vital tool for webmasters and SEO professionals, offering control over how search engines and other web crawlers interact with a website. By understanding and correctly implementing robots.txt, you can optimize your website’s crawl efficiency, protect sensitive data, and enhance your overall SEO strategy. Regularly updating and monitoring this file will help maintain its effectiveness and ensure your website remains search engine-friendly.